Maximizing the Value of Indicators of Compromise and Reimagining Their Role in Modern Detection

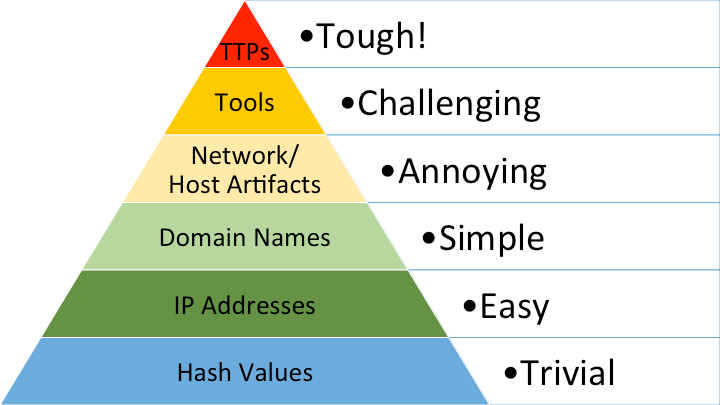

You'd be hard-pressed to find a detection engineer who doesn't know the Pyramid of Pain[1]. It, along with MITRE ATT&CK[2], really solidified the argument for prioritizing behavioral detections. I know I’ve used it to make that exact point many times.

Lately, though, I've wondered if we’ve pushed its lesson too far. Have we become so focused on TTPs that we've dismissed the value at the bottom of the pyramid? The firehose of indicators is a daily reality, and it's time our detection strategies caught up, with a more pragmatic look at indicators' effectiveness, their nuances, and how to get the most value out of the time we're required to spend on them.

What is an Indicator of Compromise?

Defining Our Terms: IOC vs. IOA

To make sure we're all speaking the same language, it's helpful to establish clear definitions. The term "indicator" can even refer to behaviors. For clarity, while I don't totally agree with how they define them, this post will use the distinction made popular by CrowdStrike[3], which separates indicators into two main categories: Indicators of Compromise (IOCs) and Indicators of Attack (IOAs).

Here’s a breakdown of how they differ according to CrowdStrike:

| Indicator of Compromise (IOC)[3:1] | Indicator of Attack (IOA)[4] |
|---|---|
| Reactive: Focuses on evidence that a breach has already occurred. | Proactive: Focuses on signs that an attack is in progress. |
| The "What": Represents the artifacts and digital forensics left behind by an attacker. | The "How": Represents the sequence of actions and behaviors an attacker is using. |
| Goal: Helps investigators understand the impact of a past incident. | Goal: Aims to detect and stop an attack before it can cause damage. |
| Examples: Malicious file hashes (MD5, SHA1), known C2 server IP addresses or domains, suspicious registry keys, or specific file names. | Examples: A process executing from a strange directory, lateral movement using stolen credentials, or a script attempting to disable security controls. |

The IOC Detection Catch-22

You'll notice CrowdStrike emphasizes a key distinction:

"An Indicator of Attack (IOA) is related to an IOC in that it is a digital artifact that helps the infosec team evaluate a breach or security event. However, unlike IOCs, IOAs are active in nature and focus on identifying a cyber attack that is in process. They also explore the identity and motivation of the threat actor, whereas an IOC only helps the organization understand the events that took place."[4:1]

IOAs explore the "identity and motivation of the threat actor," while an IOC "only helps the organization understand the events that took place."

While IOCs can be used for detection, experience shows they are often more valuable for retroactively confirming whether a breach occurred.[5] Their use in real-time detection is often plagued by false positives and false negatives because indicators go stale so quickly. An IOC's shelf life can be just days after appearing in a threat feed. Indicators buried in in-depth blog posts may already be weeks old by the time they get through a marketing review cycle and are finally published.

Because of this, the industry has rightfully shifted toward behavioral detections (IOAs). This has made life much harder for adversaries on the endpoint, with many now actively avoiding systems with an EDR installed. This success, however, highlights the continued need for other solutions like Network Detection & Response (NDR)[6][7]. So if behavioral detections are king, why do we still bother with IOCs at all?

For one, no organization wants to be the one breached by an adversary using a domain name that was publicly identified as malicious months earlier. This fear applies to most types of IOCs, so as an industry, we continue to collect and use them for detection. EDR tools, for example, often use a tiered approach: a small, high-fidelity list of IOCs is kept on the endpoint for immediate blocking, while larger lists are checked once telemetry reaches the cloud to avoid performance issues. Many services also perform retroactive hunts against historical data using newly discovered indicators.
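
The tiered approach and retroactive hunts described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: `TieredIOCMatcher`, `Event`, and `retro_hunt` are hypothetical names, indicators are plain domain strings, and the split between the endpoint and cloud tiers is up to you. It is not how any particular EDR product implements this.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Event:
    """A simplified telemetry record: when, which host, and which domain was contacted."""
    timestamp: datetime
    host: str
    domain: str


class TieredIOCMatcher:
    """Small high-fidelity set checked on the endpoint; larger set checked in the cloud."""

    def __init__(self, endpoint_iocs: set[str], cloud_iocs: set[str]):
        self.endpoint_iocs = {d.lower() for d in endpoint_iocs}
        self.cloud_iocs = {d.lower() for d in cloud_iocs}

    def check_on_endpoint(self, domain: str) -> bool:
        # Cheap local lookup against the short list, suitable for immediate blocking.
        return domain.lower() in self.endpoint_iocs

    def check_in_cloud(self, domain: str) -> bool:
        # Full lookup once telemetry is server-side, where list size doesn't burden the endpoint.
        d = domain.lower()
        return d in self.endpoint_iocs or d in self.cloud_iocs


def retro_hunt(history: list[Event], new_iocs: set[str]) -> list[Event]:
    """Re-check stored telemetry against indicators that were published after the fact."""
    wanted = {d.lower() for d in new_iocs}
    return [e for e in history if e.domain.lower() in wanted]
```

In practice the historical data would come from your SIEM or data lake rather than an in-memory list, but the idea is the same: newly published indicators are replayed against what you already collected.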

This reality has led many organizations down the path of "more is better." They ingest every available threat feed, which often results in alert fatigue, time-consuming investigations, and a constant effort to evaluate and maintain the feeds. It's a major waste of resources and a morale killer for SOC analysts. When it comes to IOC-based detection, quality is everything.

There's been a lot of great research on using LLMs to automate threat intel ingestion from blogs and reports. But while we're getting better at extracting more data, there's been little focus on how to make that data more useful for detection, or whether it's even worth the effort.

Not All IOCs Are Created Equal

When evaluating an IOC's value, the immediate thought is often its type—whether it's a hash, IP, or domain. But a far more critical factor comes first: its context.

An indicator without context isn't threat intelligence; it's just data. A raw list of "malicious IPs" is nearly useless for an investigator. If an alert fires without any associated contextual information, it can trigger a time-consuming investigation that often ends in a false positive. The value of an IOC grows with the quality of its context.

- Minimal Context. An IOC with basic metadata, like an associated malware family name, is a major step up. If an analyst gets a hit on an IP address tied to a specific malware family, they have an immediate starting point for what behaviors to look for.
- Enriched Context. More valuable are enriched IOCs, the kind common in commercial feeds or MISP servers. This context might specify a domain's role (C2 vs. payload delivery), tag a threat actor, or detail the payload it hosted. Knowing a domain was delivering specific maldocs is an excellent starting point for an investigation. This combination of rich detail and timely delivery makes these IOCs the sweet spot for detection, extending their useful shelf life.
- Full Intelligence Report. An IOC backed by a full write-up is often the most detailed. The trade-off, however, is timeliness. By the time a report is written, edited, and approved by marketing, its indicators are often stale, making them better suited for retroactive lookups than for real-time detection. Despite this, these IOCs should still be used for detection: the volume is typically low, write-ups (usually) focus on high-impact indicators, and a hit provides the highest level of context, signaling a potentially serious event.
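
One way to make these context tiers explicit in tooling is to carry the context with the indicator and surface it in the alert. A minimal sketch, assuming hypothetical field names (`malware_family`, `role`, `threat_actor`, `report_url`) rather than any particular feed or MISP schema:

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional


class ContextLevel(IntEnum):
    NONE = 0
    MINIMAL = 1      # e.g. a malware family name
    ENRICHED = 2     # role, actor, payload details
    FULL_REPORT = 3  # backed by a published write-up


@dataclass
class IOC:
    value: str
    malware_family: Optional[str] = None
    role: Optional[str] = None          # e.g. "c2" or "payload_delivery"
    threat_actor: Optional[str] = None
    report_url: Optional[str] = None


def context_level(ioc: IOC) -> ContextLevel:
    """Classify an indicator by the richest context it carries."""
    if ioc.report_url:
        return ContextLevel.FULL_REPORT
    if ioc.role or ioc.threat_actor:
        return ContextLevel.ENRICHED
    if ioc.malware_family:
        return ContextLevel.MINIMAL
    return ContextLevel.NONE


def triage_note(ioc: IOC) -> str:
    """Give the analyst whatever starting point the context allows."""
    parts = [f"IOC hit: {ioc.value}", f"context={context_level(ioc).name}"]
    for label, val in (("family", ioc.malware_family), ("role", ioc.role),
                       ("actor", ioc.threat_actor), ("report", ioc.report_url)):
        if val:
            parts.append(f"{label}={val}")
    return "; ".join(parts)
```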

The Atomic Indicators, Re-examined

The Pyramid of Pain focuses on three foundational IOC types: Hashes, IP Addresses, and Domain Names, with hashes being the easiest for an attacker to change and domains being the most difficult.

Hashes

As mentioned earlier, traditional cryptographic hashes are the easiest indicators for an attacker to change. They are brittle by design; modifying a single bit in a malicious file creates a completely new hash, rendering the original indicator useless. Malware is often polymorphic, generating unique hashes for each target to evade detection.
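
The brittleness is easy to demonstrate with Python's standard `hashlib`: flip one bit in a contrived file body and compare the SHA-256 digests. The byte string below is only a stand-in for a real file.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def flip_bit(data: bytes, bit_index: int) -> bytes:
    """Return a copy of data with exactly one bit flipped."""
    buf = bytearray(data)
    buf[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(buf)


def matching_positions(a: str, b: str) -> int:
    """Count hex characters that happen to line up between two digests."""
    return sum(x == y for x, y in zip(a, b))


# Contrived stand-in for a file: an "MZ" header, padding, and some payload bytes.
original = b"MZ" + b"\x00" * 62 + b"pretend this is a malicious payload"
modified = flip_bit(original, bit_index=100)  # flips one bit inside the padding

h1, h2 = sha256_hex(original), sha256_hex(modified)
print(h1)
print(h2)
print("matching hex characters:", matching_positions(h1, h2), "of", len(h1))
```

Expect only a handful of the 64 hex characters to line up, and only by chance: the two digests share nothing useful for matching.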

This isn't just a theoretical weakness. In his paper, "Stop Using Hashes for Detection," Pyramid of Pain creator David Bianco provided data to support this very point. His analysis of a large dataset of malware submissions found that the vast majority of malicious hashes were only ever seen by a single organization. In fact, hashes submitted more than 10 times accounted for a mere 0.11% of the total files. This strongly suggests that relying on third-party hash lists for proactive detection is inefficient, as you are statistically unlikely to ever encounter most hashes from a given feed.

When Hashes Still Have Value

Despite their limitations in proactive detection, hashes excel in specific scenarios:

- Incident Scoping: Bianco notes that hashes are extremely effective for scoping after you have a confirmed infection. Once you identify a malicious file on one system, its hash becomes the perfect tool for hunting across your environment to find other instances of that exact file.
- Tracking Dual-Use Tools: Hashes are also valuable for tracking legitimate but potentially malicious tools like `nmap` or specific versions of RMM tools.[8] Attackers often use these legitimate tools to blend in. By maintaining hash sets of these tools, you can create high-fidelity alerts when they appear in unexpected places. While any static detection can be evaded, the goal is to force an adversary's hand. Forcing them to modify a standard tool (for example, by adding padding or using a packer to change its hash) creates new opportunities to detect those evasive behaviors in their own right. I track a large set of dual-use tools in the CelesTLSH hash database.
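
Both uses boil down to walking a file system and comparing digests against a known set. A minimal sketch, assuming you already have a set of confirmed-malicious SHA-256 hashes and a hypothetical mapping of dual-use tool hashes to tool names; the `allowed_dirs` where those tools are expected to live are also an assumption you would tune per environment.

```python
import hashlib
import os
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large binaries don't need to fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def sweep(root: Path, malicious: set[str], dual_use: dict[str, str],
          allowed_dirs: set[Path]) -> list[tuple[str, Path, str]]:
    """Walk a directory tree and report:
    - "scoping" hits: exact copies of a confirmed-malicious file
    - "dual-use:<tool>" hits: a known tool found outside its expected locations
    """
    allowed = [d.resolve() for d in allowed_dirs]
    findings = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            try:
                digest = sha256_file(path)
            except OSError:
                continue  # unreadable file: skip it rather than abort the sweep
            if digest in malicious:
                findings.append(("scoping", path, digest))
            elif digest in dual_use and not any(
                    path.resolve().is_relative_to(d) for d in allowed):
                findings.append(("dual-use:" + dual_use[digest], path, digest))
    return findings
```

At scale you would run the equivalent query against EDR file telemetry instead of walking disks, but the matching logic is identical.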

Fuzzy Hashes

Not all hashes are created equal. Fuzzy hashing algorithms like TLSH[9][10] are resistant to minor modifications, allowing them to detect modified versions of the same file.[11] This makes them harder for an adversary to evade, but it comes with the downside of potential false positives[12].

One nuance I'll add: using more file metadata than just the TLSH hash when deciding whether to alert can dramatically improve fidelity. Fuzzy hashes aren't a silver bullet and can be evaded, as the paper points out. But as I mentioned above, everything can be evaded, and fuzzy hashing is great for threat hunting and detection and much more powerful than a simple cryptographic hash.
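
To illustrate using a TLSH match as one input rather than the verdict, here is a sketch of that gating logic. It assumes the TLSH distance was already computed elsewhere (for example with the `tlsh` Python package's `tlsh.diff`), and every threshold, path hint, and the trusted-signer shortcut are hypothetical values chosen for illustration, not recommendations from the paper.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds; TLSH distance is 0 for identical input and grows as files diverge.
CLOSE_MATCH = 30
LOOSE_MATCH = 70
# Hypothetical hints for user-writable locations (compared case-insensitively).
USER_WRITABLE_HINTS = ("\\appdata\\", "\\temp\\", "\\downloads\\", "/tmp/")


@dataclass
class FileContext:
    tlsh_distance: int            # distance to the closest known-malicious sample
    size_bytes: int
    reference_size_bytes: int     # size of that known-malicious sample
    signed_by_trusted_publisher: bool
    path: str


def verdict(ctx: FileContext) -> Optional[str]:
    """Treat the TLSH distance as one input alongside file metadata, not as the verdict."""
    if ctx.tlsh_distance > LOOSE_MATCH:
        return None  # not similar enough to matter
    if ctx.signed_by_trusted_publisher:
        # Simplification: signed files are a common false-positive source,
        # although signing certificates can be stolen.
        return None
    size_ratio = ctx.size_bytes / max(ctx.reference_size_bytes, 1)
    similar_size = 0.8 <= size_ratio <= 1.25
    suspicious_path = any(hint in ctx.path.lower() for hint in USER_WRITABLE_HINTS)

    if ctx.tlsh_distance <= CLOSE_MATCH and (similar_size or suspicious_path):
        return "alert"
    if similar_size and suspicious_path:
        return "alert"
    return "hunt"  # worth a threat-hunting look, not a page
```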

While there are many variants of IOCs, these three levels effectively illustrate the pyramid's core principle: an indicator's value is directly related to the pain it causes an adversary to change it.

Side Note: This paper[11:1] is really wonderful and matches my own real world findings. I'm going to release an article in the next couple of days on some of the results I've seen of running a fuzzy hashing scanner in production environments for detection, and some of the things I've found that really help mitigate the false positives and make it more useful for real world detection.

IP Addresses

The rise of the cloud has reduced the effectiveness of IP addresses as IOCs. So much malicious activity now originates from shared infrastructure, where an IP may just point to a major cloud provider. Anecdotally, while hashes are prone to false negatives, IP addresses are the most prone to false positives and should be expired from detection lists quickly.

Gaining More Value: In environments limited to NetFlow or VPC Flow Logs, IP-based IOCs are more valuable. If you face high false positives, try targeting your IP-based alerts to specific network segments, such as those with limited visibility or high security requirements. Direction and connection state are also key: a blocked inbound connection attempt from a reportedly malicious address is noise, but an outbound connection with a completed three-way handshake is interesting.
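
A sketch of those ideas (short expiry, outbound completed connections only, and watched segments) applied to flow records. The TTL, segment ranges, and `Flow` fields are hypothetical; the `ACCEPT`/`REJECT` values mirror AWS VPC Flow Logs, and how you infer a completed handshake depends on what your flow source records (TCP flags, bytes returned, and so on).

```python
import ipaddress
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical TTL: IP indicators go stale quickly, so expire them aggressively.
IP_IOC_TTL = timedelta(days=14)

# Hypothetical segments worth alerting on (limited visibility or high security requirements).
WATCHED_SEGMENTS = [
    ipaddress.ip_network("10.20.0.0/16"),
    ipaddress.ip_network("10.99.5.0/24"),
]


@dataclass
class Flow:
    src: str
    dst: str
    dst_port: int
    action: str                # "ACCEPT" or "REJECT", as in AWS VPC Flow Logs
    handshake_completed: bool  # e.g. inferred from TCP flags or bytes returned


def normalize(ip: str) -> str:
    return str(ipaddress.ip_address(ip))


def active_iocs(feed: dict[str, datetime], now: datetime) -> set[str]:
    """Keep only IP indicators first seen within the TTL."""
    return {normalize(ip) for ip, first_seen in feed.items() if now - first_seen <= IP_IOC_TTL}


def worth_alerting(flow: Flow, iocs: set[str]) -> bool:
    """Outbound, accepted, fully established connections from watched segments only."""
    if normalize(flow.dst) not in iocs:
        return False  # also drops inbound attempts *from* a listed address
    if flow.action != "ACCEPT" or not flow.handshake_completed:
        return False
    src = ipaddress.ip_address(flow.src)
    return any(src in net for net in WATCHED_SEGMENTS)
```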

Fortunately, IP addresses have a ton of enrichment possibilities. Combining IOC hits with data like geolocation, ASN, or whether an IP is a known TOR node or commercial VPN can significantly reduce noise compared to alerting on an IP address in isolation.
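
A sketch of combining a feed hit with enrichment into a triage decision. The enrichment fields would come from sources such as a GeoIP/ASN database and a TOR exit list; the field names, weights, and expected countries are hypothetical placeholders.

```python
from dataclasses import dataclass

# Hypothetical policy value: countries your infrastructure normally talks to.
EXPECTED_COUNTRIES = {"US", "CA"}


@dataclass
class IpContext:
    country: str             # e.g. from a GeoIP database
    is_cloud_provider: bool  # e.g. derived from the ASN
    is_tor_exit: bool        # e.g. from a published TOR exit list
    is_commercial_vpn: bool


def triage(feed_hit: bool, ctx: IpContext) -> str:
    """Weigh a threat-feed hit together with enrichment instead of alerting on it alone."""
    score = 0
    if feed_hit:
        score += 2
    if ctx.is_tor_exit:
        score += 3
    if ctx.is_commercial_vpn:
        score += 1
    if ctx.country not in EXPECTED_COUNTRIES:
        score += 1
    if ctx.is_cloud_provider and not (ctx.is_tor_exit or ctx.is_commercial_vpn):
        score -= 2  # shared infrastructure: a feed hit alone is weak evidence
    if score >= 4:
        return "alert"
    if score >= 2:
        return "queue-for-review"
    return "log-only"
```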

Domains

Domains are perhaps the most nuanced and powerful of the atomic indicators. To understand why, let's break down a URL like https://super.evil.example.com/api/v1/something:

A breakdown of the above:

- `super` is a subdomain
- `evil` is also a subdomain
- `.com` is the Top Level Domain (TLD)
- `example.com` is the domain name
- `super.evil.example.com` is the fully qualified domain name (FQDN)
- `/api/v1/something` is the URI path
- `https://super.evil.example.com/api/v1/something` is the URL
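
The same breakdown can be produced with Python's standard `urllib.parse.urlsplit`. This sketch takes a naive shortcut for the registered domain (the last two labels), which breaks on multi-label public suffixes like `co.uk`; production code should consult the Public Suffix List.

```python
from urllib.parse import urlsplit


def break_down(url: str) -> dict[str, str]:
    """Split a URL into the parts described above."""
    parts = urlsplit(url)
    fqdn = parts.hostname or ""  # urlsplit lowercases the hostname
    labels = fqdn.split(".")
    # Naive: assumes a single-label public suffix such as "com".
    return {
        "scheme": parts.scheme,
        "tld": "." + labels[-1],
        "domain": ".".join(labels[-2:]),
        "subdomains": ".".join(labels[:-2]),
        "fqdn": fqdn,
        "path": parts.path,
        "url": url,
    }


print(break_down("https://super.evil.example.com/api/v1/something"))
```

For the example URL, this yields a TLD of `.com`, a domain of `example.com`, subdomains `super.evil`, and the path `/api/v1/something`.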

Retroactive Domain IOCs

Once a malicious domain hits a public feed, it's often abandoned within a few days. A major challenge with domain IOCs is avoiding false positives from legitimate, popular sites, which are tracked by projects like the Living Off Trusted Sites (LOTS) Project[13]. To avoid this, you can filter your domain-based IOCs against the LOTS list or against a public popularity ranking like the Tranco top 1 million[14].

A good rule for domains on these lists is to only use them as IOCs when the indicator is the full, specific URL. Strictly speaking, that's a different type of IOC, and URLs and domains generally don't come from the same feeds. Even so, getting the full URL when possible is more useful: if a LOTS site is abused, the URL points to the actual malicious content rather than the whole site. However, TLS encryption prevents this level of visibility for most organizations.
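
A sketch of that filtering, assuming you've loaded a Tranco-style list (`rank,domain` per line) and, optionally, LOTS domains into one `trusted` set. Domain-level IOCs on trusted sites are dropped, while full URL IOCs are still matched. The registered-domain logic is a naive two-label approximation, and the function names are hypothetical.

```python
from typing import Callable, Iterable, Optional
from urllib.parse import urlsplit


def load_tranco(lines: Iterable[str], top_n: int = 100_000) -> set[str]:
    """Parse Tranco-style 'rank,domain' CSV lines, keeping the top N domains."""
    popular = set()
    for line in lines:
        rank, _, domain = line.strip().partition(",")
        if rank.isdigit() and int(rank) <= top_n:
            popular.add(domain.lower())
    return popular


def registered_domain(host: str) -> str:
    # Naive two-label approximation; use the Public Suffix List in practice.
    return ".".join(host.lower().rstrip(".").split(".")[-2:])


def normalize_url(url: str) -> str:
    # Lowercase scheme and host; keep path and query as-is (they can be case-sensitive).
    p = urlsplit(url)
    query = f"?{p.query}" if p.query else ""
    return f"{p.scheme.lower()}://{p.hostname or ''}{p.path}{query}"


def build_matcher(domain_iocs: Iterable[str], url_iocs: Iterable[str],
                  trusted: set[str]) -> Callable[[str], Optional[str]]:
    # Domain IOCs on trusted/popular sites are dropped: too noisy at the domain level.
    domains = {d.lower().rstrip(".") for d in domain_iocs
               if registered_domain(d) not in trusted}
    # Full URLs are specific enough to keep, even when they live on a trusted site.
    urls = {normalize_url(u) for u in url_iocs}

    def match(observed_url: str) -> Optional[str]:
        if normalize_url(observed_url) in urls:
            return "url"
        if (urlsplit(observed_url).hostname or "") in domains:
            return "domain"
        return None

    return match
```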

Malicious domains research

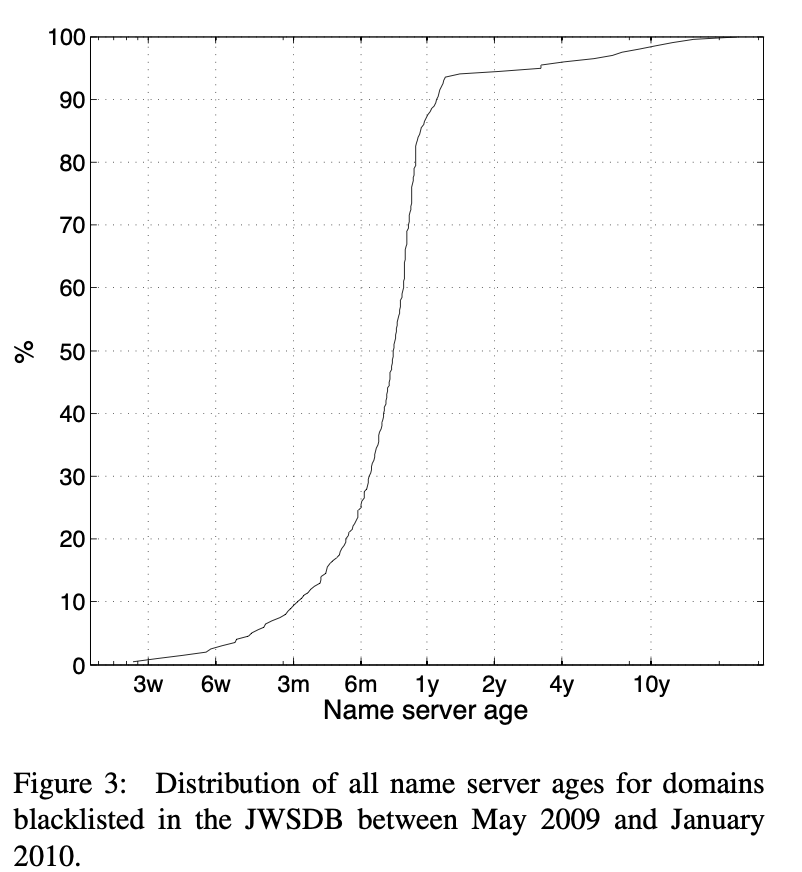

Threat actors compromise legitimate, long-established sites all the time, and LOTS sites are a major exception, but a malicious domain is much more likely to be young (less than a year old). As Mark Baggett mentioned in an old SANS webcast[12:1]:

When phishing domains are stood up, they typically have a rather recent ‘born-on date,’ if you will, for their domains. Google.com, Microsoft.com, all of these legitimate domain name registrations have been out there for a very long time. By automating the retrieval of WHOIS information, and seeing how long a domain has been on the network, we can attempt to identify some of these phishing domains

This is actually backed up by research via the paper On the Potential of Proactive Domain Blacklisting[15] which states:

We use an age of less than one year to indicate youth—as shown in Figure 3, almost 90% of NSs involved in hosting malicious domains are younger than a year.

I recommend reading the entire paper as it is very well written.
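
A small sketch of an age check, borrowing the paper's one-year cutoff for "youth". It assumes the creation date has already been retrieved through a WHOIS or RDAP lookup (not shown) as a timezone-aware datetime; treating a missing date as "not young" is a design choice you may want to invert.

```python
from datetime import datetime, timezone
from typing import Optional

YOUNG_DOMAIN_DAYS = 365  # the paper's "less than one year" notion of youth


def domain_age_days(created: datetime, now: Optional[datetime] = None) -> int:
    """Whole days since registration; both datetimes must be timezone-aware."""
    now = now or datetime.now(timezone.utc)
    return (now - created).days


def is_young(created: Optional[datetime], now: Optional[datetime] = None) -> bool:
    # A missing creation date (redacted record, failed lookup) is treated as unknown, not young.
    if created is None:
        return False
    return domain_age_days(created, now) < YOUNG_DOMAIN_DAYS
```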

IOCs Can Have... Behaviors?

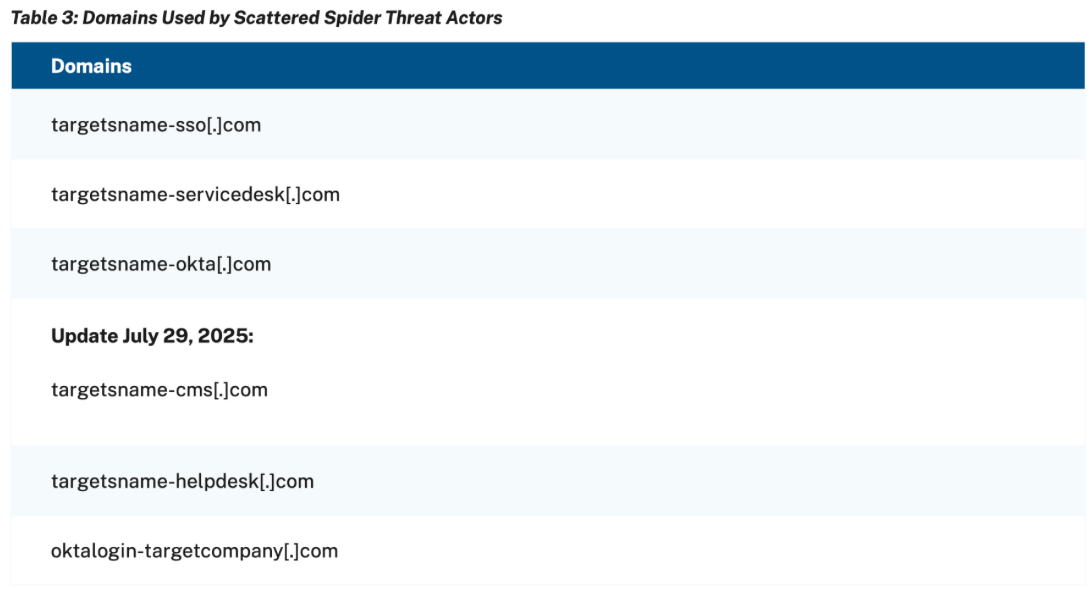

Domain usage often reveals patterns. For instance, according to CISA Advisory AA23-320A[16], the threat group Scattered Spider mimics legitimate authentication domains to phish targets.

The behavior isn't just phishing; it also includes the specific naming convention used for evasion. This allows for proactive detectors such as: domain contains `oktalogin-` AND `yourdomainname`.
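
That detector translates almost directly into code. A minimal sketch: `acme` and `acme.com` stand in for your organization's name and domains, `oktalogin-` is the token from the example above, and any further lure tokens you add are your own assumptions.

```python
from typing import Callable, Iterable


def make_lure_detector(org_name: str, own_domains: Iterable[str],
                       lure_tokens: Iterable[str] = ("oktalogin-",)) -> Callable[[str], bool]:
    org = org_name.lower()
    own = {d.lower().rstrip(".") for d in own_domains}
    tokens = tuple(t.lower() for t in lure_tokens)

    def is_lure(domain: str) -> bool:
        d = domain.lower().rstrip(".")
        # Never flag the organization's own domains or their subdomains.
        if any(d == o or d.endswith("." + o) for o in own):
            return False
        # The rule from the text: contains a lure token AND the organization's name.
        return org in d and any(t in d for t in tokens)

    return is_lure


is_lure = make_lure_detector("acme", {"acme.com"})
print(is_lure("oktalogin-acme.com"))
```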

Other behavioral indicators exist. For example, Microsoft uses MarkMonitor as its registrar (almost) exclusively, so any Microsoft-themed domain registered elsewhere is immediately suspicious.

Caveat: Not all business units do this, for example the xbox.com domain.

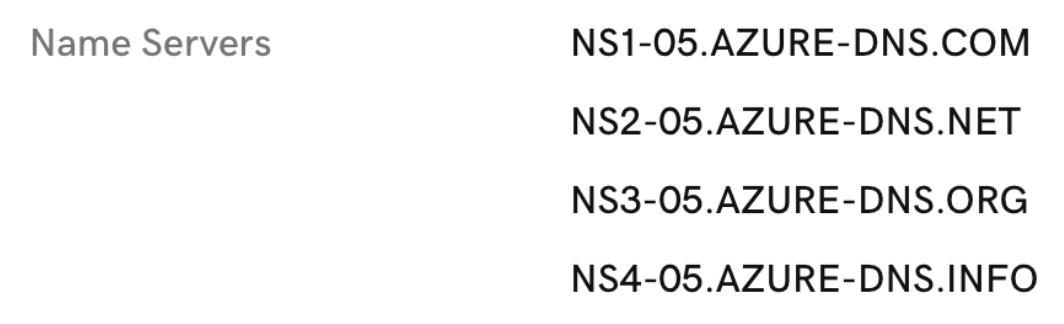

Additionally, it appears that the name servers returned for official Microsoft domains all follow this pattern; any deviation should again raise a red flag.
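
Registrar and name server expectations can be expressed as a simple brand profile. In this sketch, registrar and name server data are assumed to come from WHOIS/RDAP and DNS lookups, `known_exceptions` models outliers like the xbox.com caveat, and the brand keyword and name server suffix are hypothetical placeholders rather than Microsoft's actual values.

```python
from dataclasses import dataclass, field


@dataclass
class DomainRecord:
    name: str
    registrar: str                                        # e.g. from WHOIS/RDAP
    nameservers: list[str] = field(default_factory=list)  # e.g. from an NS lookup


@dataclass
class BrandProfile:
    keywords: tuple[str, ...]
    expected_registrars: tuple[str, ...]
    expected_ns_suffixes: tuple[str, ...]
    known_exceptions: frozenset[str] = frozenset()  # legitimate outliers


def brand_flags(rec: DomainRecord, brand: BrandProfile) -> list[str]:
    """Return reasons a brand-themed domain deviates from the brand's usual setup."""
    name = rec.name.lower().rstrip(".")
    if name in brand.known_exceptions or not any(k in name for k in brand.keywords):
        return []
    flags = []
    registrar = rec.registrar.lower()
    if not any(r.lower() in registrar for r in brand.expected_registrars):
        flags.append("unexpected-registrar")
    nameservers = [ns.lower().rstrip(".") for ns in rec.nameservers]
    if any(not ns.endswith(brand.expected_ns_suffixes) for ns in nameservers):
        flags.append("unexpected-nameservers")
    return flags
```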

Not all TLDs are equal

Threat actors like to abuse TLDs that are low cost or free, that double as file extensions (such as .sh), or that can be purchased through bulletproof registrars[17]. As a result, abuse concentrates in TLDs that are rarely used by enterprise applications.

While not directly an indicator, a domain with certain TLDs is more likely to be abused than others. Exaggerating (only slightly), Chris Sanders mentioned in one of his in-person courses something along the lines of: simply ending in .top is almost enough to make a domain suspicious on its own.

Spamhaus[18] maintains one of the more popular TLD abuse statistic trackers: https://www.spamhaus.org/reputation-statistics/cctlds/domains/

Brand reputation monitoring

I touched on this in the last section: given how invested Microsoft is in MarkMonitor as a registrar, it's likely they also use MarkMonitor's brand reputation monitoring service. Which leads me to my next point: proactive DNS brand reputation monitoring is incredibly powerful.

I likely have some bias here. In one of my early security roles, one of my duties was to investigate the alerts from our brand reputation monitoring service, and I saw firsthand just how powerful it can be. From what I can recall, every single one of our clients had at least one phishing domain, with the larger clients targeted much more frequently. Because it rarely took more than a day to work through each day's load, in the large majority of cases we were able to proactively detect and block domains before attackers could operationalize them against the targeted organizations.

Despite being an IOC, this was usually a high-fidelity indicator of an attack, surfacing before the attacker could even operationalize the infrastructure, much less attack the victim. This can make more advanced attacks much more difficult.

If you can't afford this sort of service, there's a free tool called DNSTwist[19] that can do something similar. A word of caution, though: it won't detect subdomains. You can potentially catch those through custom tooling and Certificate Transparency logs[20].

Note: This can be noisy for larger organizations or organizations with a lot of domains, which may make commercial options more attractive.
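
To give a feel for what this kind of tooling does, here is a simplified sketch of both ideas: generating a few lookalike permutations of a domain (similar in spirit to some of DNSTwist's fuzzers, not its actual implementation) and flagging brand mentions in certificate names pulled from a Certificate Transparency source of your choice. The function names, TLD list, and `acme` brand are hypothetical.

```python
def typo_permutations(domain: str, alt_tlds=("net", "org", "co", "top")) -> set[str]:
    """Generate a few simple lookalikes: omission, repetition, transposition, TLD swap."""
    name, _, tld = domain.lower().rpartition(".")
    out = set()
    for i in range(len(name)):
        out.add(f"{name[:i]}{name[i + 1:]}.{tld}")       # omission
        out.add(f"{name[:i]}{name[i]}{name[i:]}.{tld}")  # repetition
        if i + 1 < len(name):
            swapped = name[:i] + name[i + 1] + name[i] + name[i + 2:]
            out.add(f"{swapped}.{tld}")                  # transposition
    for alt in alt_tlds:
        if alt != tld:
            out.add(f"{name}.{alt}")                     # TLD swap
    out.discard(domain.lower())
    return {d for d in out if not d.startswith((".", "-"))}


def suspicious_cert_names(cert_names, brand: str, own_domains) -> list[str]:
    """Flag certificate names that mention the brand but aren't under our own domains.
    This catches subdomain-style lures (brand.attacker.example) that permutations miss."""
    own = {d.lower() for d in own_domains}
    hits = []
    for raw in cert_names:
        name = raw.lower().lstrip("*.").rstrip(".")
        if brand.lower() in name and not any(name == o or name.endswith("." + o) for o in own):
            hits.append(name)
    return hits
```

Permutations would then be resolved or checked against newly registered domain data, which is where the noise mentioned above comes from.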

The role of IOCs in detection is changing, and where I think it's going

The cat-and-mouse game between attackers and defenders is only speeding up. I hypothesize that with AI helping attackers write more advanced malware and spin up infrastructure faster than ever, traditional IOCs like file hashes and IP addresses are going stale almost instantly. Attackers are abusing legitimate cloud services, making IP-based alerts prone to false positives. This constant change means the role of an indicator has to evolve.

It's important to note that IOCs still have a place in historical lookups and threat hunts. I envision the role of indicators in detection shifting from being the sole source of an alert (with some caveats, obviously) to a source of enrichment. Instead of being the alert itself, an IOC becomes a piece of context: another data point in a more sophisticated detection model that decides whether something is actually malicious.

For example, enriching an IP address with information like geolocation, ASN, and whether it belongs to a legitimate consumer-grade VPN or a TOR node can be much more valuable than a list of potentially malicious IPs. Similarly, does your cloud or server infrastructure need to communicate over Telegram or Discord? In most cases this is unlikely and a high-probability indicator of compromise, yet neither of these domains is technically "malicious".
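
The Telegram/Discord example needs no IOC at all, only context about the host. A sketch, assuming DNS events are already tagged with an asset role; the tag names are hypothetical and the domain list is illustrative rather than exhaustive.

```python
from dataclasses import dataclass

# Illustrative, not exhaustive: domains used by Telegram and Discord.
MESSAGING_DOMAINS = ("telegram.org", "t.me", "discord.com", "discordapp.com", "discord.gg")

# Hypothetical asset-inventory tags for hosts with no business reason to reach chat platforms.
SERVER_TAGS = {"server", "cloud-workload"}


@dataclass
class DnsEvent:
    host: str
    host_tags: set[str]
    query: str


def is_messaging(query: str) -> bool:
    """Exact match or subdomain of a messaging platform domain."""
    q = query.lower().rstrip(".")
    return any(q == d or q.endswith("." + d) for d in MESSAGING_DOMAINS)


def server_egress_to_messaging(evt: DnsEvent) -> bool:
    """Neither domain is malicious; the signal is *which* host is talking to it."""
    return bool(evt.host_tags & SERVER_TAGS) and is_messaging(evt.query)
```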

For file-based threats, static hashes are a thing of the past. While the future is fuzzy hashing, simply alerting on a similarity score can lead to false positives. The key is to use that score as context, not as a verdict. For instance, a TLSH comparison might show a file is a close match (a low distance score) to known malware. By itself, that’s just noise. But combined with suspicious file attributes or behaviors, it becomes a powerful signal worth investigating.

Domains will likely remain our most durable type of IOC, but their use in detection is changing. Instead of relying on IOC feeds, the future is about scoring a domain's risk based on context. We'll assess its potential threat by looking at its age, its category, like if it's pretending to be a bank, its TLD, commonly abused naming conventions such as the ones used by Scattered Spider, and other key enrichment metadata.
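
A sketch of what context-based domain scoring might look like. Every weight, keyword, and TLD value here is a hypothetical placeholder (real TLD weights could be derived from statistics like Spamhaus's), and the reasons list is there so an analyst can see why a domain scored the way it did.

```python
from dataclasses import dataclass
from typing import Optional

# Placeholder weights; derive real ones from TLD abuse statistics such as Spamhaus's.
TLD_WEIGHTS = {"top": 2.0, "com": 0.0, "org": 0.0, "net": 0.0}
DEFAULT_TLD_WEIGHT = 0.5

# Hypothetical impersonation categories and keywords that hint at them.
CATEGORY_KEYWORDS = {
    "banking": ("bank", "secure-pay"),
    "identity": ("sso", "oktalogin-", "mfa"),
}


@dataclass
class DomainFacts:
    name: str
    age_days: Optional[int]           # from WHOIS/RDAP; None when unknown
    on_popularity_list: bool = False  # e.g. present in a Tranco top-N list


def risk_score(facts: DomainFacts) -> tuple[float, list[str]]:
    """Score a domain from context, returning the score and human-readable reasons."""
    name = facts.name.lower().rstrip(".")
    score, reasons = 0.0, []
    if facts.age_days is not None and facts.age_days < 365:
        score += 2.0
        reasons.append(f"young domain ({facts.age_days} days)")
    tld = name.rsplit(".", 1)[-1]
    weight = TLD_WEIGHTS.get(tld, DEFAULT_TLD_WEIGHT)
    if weight:
        score += weight
        reasons.append(f".{tld} TLD (+{weight})")
    for category, keywords in CATEGORY_KEYWORDS.items():
        if any(k in name for k in keywords):
            score += 1.5
            reasons.append(f"looks like {category}")
    if facts.on_popularity_list:
        score -= 3.0
        reasons.append("popular domain")
    return score, reasons
```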

While our time is best spent at the top of the Pyramid, most security teams rely on some form of IOC-based detection. The key is to extract the most value from those indicators, and their true value is always defined by context. The original intent of the Pyramid of Pain wasn't to get into the weeds on specific indicators or indicator types, but to more effectively communicate why we as an industry should spend our time focusing on TTP-based detectors; when it was released, IOC-based detection reigned supreme. Now that I feel that message has been communicated effectively, my hope is that we start using the lower levels of the pyramid more effectively.

If I were to update the Pyramid to reflect this nuance among IOCs, it would look like this:

I've ranked URLs and Domains above Fuzzy Hashes primarily because they provide richer data for enrichment and more opportunities for creating custom detection logic. You can build powerful detectors based on patterns observed in a URL's structure—patterns that often lack public signatures.

In contrast, the output of a fuzzy hash typically lacks an identifiable internal structure for this kind of custom logic, relying instead on other file metadata for context. While David Bianco (creator of the Pyramid of Pain) includes URL analysis in the "Network/Host Artifacts" layer to detect attack patterns and tool marks, I've broken them out here. Malicious URLs are often consumed as simple indicators from data feeds, and I believe their unique dual role as both an indicator and a complex artifact warrants a distinct place in the hierarchy.

As a final consideration, there is significant potential in using machine learning (ML) to automatically identify subtle or non-obvious attack patterns within the URIs from your malicious URL data feeds.
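
As a non-ML starting point (and a possible feature-engineering step for a model later), URI paths from a feed can be collapsed into structural templates and counted, which surfaces repeated patterns such as a shared gate path. This is plain pattern counting, not machine learning, and the placeholder rules below are hypothetical and deliberately crude.

```python
import re
from collections import Counter
from urllib.parse import urlsplit

# Hypothetical rules, checked in order, that collapse variable-looking path segments.
SEGMENT_RULES = [
    (re.compile(r"^[0-9a-f]{16,}$", re.IGNORECASE), "<hex>"),
    (re.compile(r"^\d+$"), "<num>"),
    (re.compile(r"^[A-Za-z0-9_-]{20,}$"), "<token>"),
]


def template(url: str) -> str:
    """Replace variable-looking path segments with placeholders."""
    segments = []
    for seg in urlsplit(url).path.split("/"):
        for pattern, placeholder in SEGMENT_RULES:
            if pattern.match(seg):
                seg = placeholder
                break
        segments.append(seg)
    return "/".join(segments)


def common_templates(urls, min_count: int = 2) -> list[tuple[str, int]]:
    """Count templates across a feed and keep the ones that repeat."""
    counts = Counter(template(u) for u in urls)
    return [(t, c) for t, c in counts.most_common() if c >= min_count]
```

A template shared across many unrelated hosts is a candidate for a custom URL detector, even when no single URL in the feed is reused.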

Ultimately, the IOC isn't obsolete, but its purpose is changing. We're moving away from treating indicators as binary 'good' or 'bad' signals. The future is all about enrichment + context. Services like Censys[21], Shodan[22], and abuse.ch[23] are making massive datasets available to everyone, not just big security vendors. This allows all of us to become more proactive, piecing together context to find malicious infrastructure before it can be used to attack anyone.

Sources

- SANS, Dennis Basilio, "On The Hunt: The Retroactive and Proactive Hunt for CTI Indicators" (Feb 2024)
- CISA, "Countering Chinese State-Sponsored Actors Compromise of Networks Worldwide to Feed Global Espionage System" (AA25-239A)
- "Another BRICKSTORM: Stealthy Backdoor Enabling Espionage into Tech and Legal Sectors"
- CISA, "Threat Actors Use Remote Monitoring and Management Software for Malicious Purposes" (AA23-025A)
- Trend Micro, "TLSH: A Locality Sensitive Hash" (whitepaper)
- "Evaluating Fuzzy Hashing in Malware Forensics" (2025), ScienceDirect
- SANS webcast, Mark Baggett (YouTube, timestamped clip)
- USENIX, "On the Potential of Proactive Domain Blacklisting" (2010)
