What Does "Visibility" Actually Mean When It Comes to Cybersecurity?

After my last post on EDR Telemetry, some great conversations made me realize we have a problem. We all use the word "visibility," but we're not speaking the same language. What a detection engineer considers visibility is completely different from what an incident handler needs. This post is my shot at a framework to fix that.

Does an industry standard already exist?

So, I went looking for an industry-standard definition of "visibility." Unsurprisingly, my search hit a wall of vendor marketing. Everyone is happy to sell you visibility, but nobody seems willing to define it. I checked the major compliance frameworks too, and they weren't much help. One caveat: I'm not much of a compliance guy, so if I've missed anything, please let me know! I'd be genuinely interested.

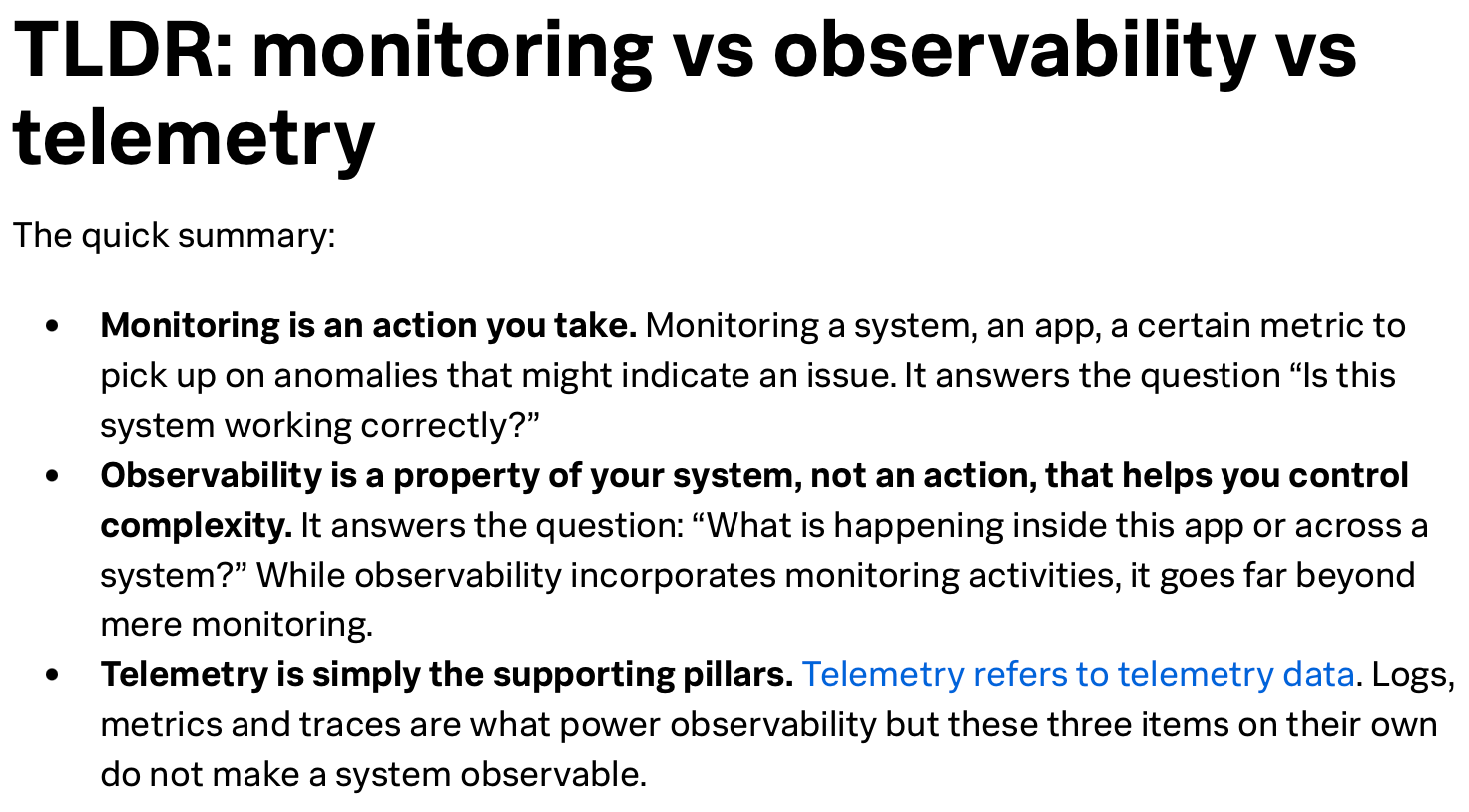

The one thing that cut through the noise was a great Splunk article, "Monitoring vs Observability vs Telemetry: What's The Difference?" that broke down the core concepts: telemetry, monitoring, and observability.

It didn't define "visibility" outright, but it gave me the perfect ingredients. It was the starting point I needed to build a practical definition, one that isn't tied to a sales pitch. I highly recommend it; it was a great read.

Why it matters

What first made me wonder what visibility even means was writing about the EDR Telemetry project and realizing that a detection engineer's definition of visibility is probably very different from that of an incident handler or digital forensics analyst running an investigation.

As a detection engineer, I don't consider myself to have visibility into something unless I can write detection logic on it. For example, when CISA published advisories on threat actors abusing ESXi hypervisors, if I had intel that a threat actor known for that technique was targeting me, I might prioritize onboarding that telemetry. If I can't write detection logic on the telemetry, though, I consider myself blind to the attacker: I can only look at what happened after it has already occurred.

A SOC analyst or incident handler, on the other hand, is entirely focused on investigating the output of the alert logic and will have a very different opinion of what counts as "visibility." From that perspective, as long as the telemetry is collected and available to view and research during an investigation, I may consider that as having visibility into the same telemetry.

In my mind, this leads to confusion when someone says they have "visibility" into an attack. It opens the door to vendors being misleading, whether intentionally or not, and to miscommunication with executive leadership, who may think they have detection or prevention coverage of something when in reality they can only look at it retroactively.

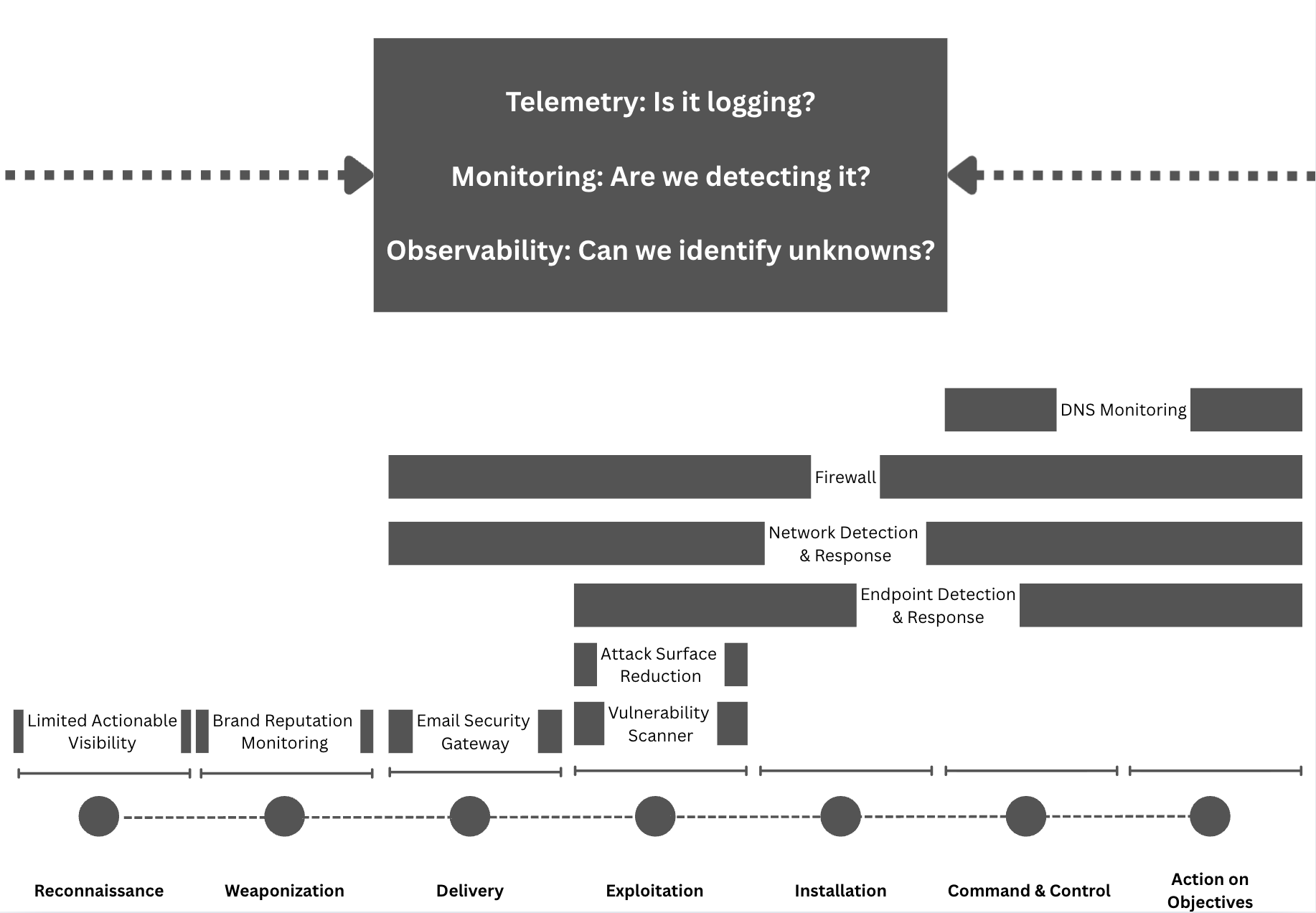

Because of this, I think applying a clear definition of visibility to a model such as Lockheed Martin's Cyber Kill Chain can give a better view of what our actual visibility in an organization is. I also think it's important to acknowledge that we in the industry work at all levels, and that we should be able to communicate our visibility both to executives and to our fellow analysts.

Building a better definition

Existing definitions aren’t much help. CISA, for instance, defines visibility this way:

Visibility refers to the observable artifacts that result from the characteristics of and events within enterprise-wide environments. The focus on cyber-related data analysis can help inform policy decisions, facilitate response activities, and build a risk profile to develop proactive security measures before an incident occurs.

It’s not wrong, but I think it leaves enough ambiguity to still cause miscommunication. Ultimately, I don't think the goal of the guide was to propose an industry standard, but rather to define how the term is used within the guide itself.

To solve the communication gap, I think we need to build our definition on the three pillars from the Splunk article: telemetry, monitoring, and observability. Lacking any one of these results in an incomplete picture. Here’s a formal definition that I think ties them together:

Visibility is the holistic state wherein a system generates telemetry, is subject to robust monitoring for known conditions, and possesses observability, enabling deep, exploratory analysis to diagnose novel problems. Full visibility is achieved only when these three elements are cohesively integrated, allowing operators to move fluidly from detecting a known issue (monitoring) to exploring its unknown root cause (observability), all supported by a common foundation of high-quality data (telemetry).

Ultimately, it’s not about having the most data. It's about having the right data (telemetry), using it to catch known threats (monitoring), and empowering your team to hunt for novel ones (observability).
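To make the definition concrete, here is a minimal Python sketch of the three pillars as a small data model. The `Visibility` class, the `Level` names, and the rule that missing telemetry means no visibility at all are my own illustrative assumptions, not an established standard.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Level(Enum):
    NONE = "none"        # no telemetry, so nothing to monitor or explore
    PARTIAL = "partial"  # telemetry exists, but at least one pillar is missing
    FULL = "full"        # telemetry + monitoring + observability


@dataclass(frozen=True)
class Visibility:
    """Hypothetical model of the three pillars for one data source."""
    telemetry: bool      # is the data generated and collected?
    monitoring: bool     # is detection logic watching for known conditions?
    observability: bool  # can analysts explore the data to diagnose unknowns?

    def missing(self) -> list[str]:
        pillars = {
            "telemetry": self.telemetry,
            "monitoring": self.monitoring,
            "observability": self.observability,
        }
        return [name for name, present in pillars.items() if not present]

    def level(self) -> Level:
        # Assumption: telemetry is the foundation; without it the other
        # two pillars have nothing to work on.
        if not self.telemetry:
            return Level.NONE
        return Level.FULL if not self.missing() else Level.PARTIAL


# ESXi logs that are collected and searchable, but with no detections written.
esxi = Visibility(telemetry=True, monitoring=False, observability=True)
print(esxi.level().value, esxi.missing())  # prints: partial ['monitoring']
```

In this sketch, the incident handler's "we have visibility" and the detection engineer's "we're blind" both describe the same `PARTIAL` state, which is exactly the gap the definition is meant to expose.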

Applying the Framework: Strategic, Operational, and Tactical Visibility

A solid visibility framework has to work at every level of an organization. Security professionals need to communicate up to leadership, across to other teams, and down into the technical weeds. This is where we can break our framework into three distinct views: strategic, operational, and tactical.

Strategic Visibility

This is the 30,000-foot view for the C-Suite. Executives don't need to know about specific log sources; they need to understand business risk. Strategic visibility maps your coverage to a high-level model like the Cyber Kill Chain to answer one key question: "Are we prepared to detect and respond to the major stages of an attack?"

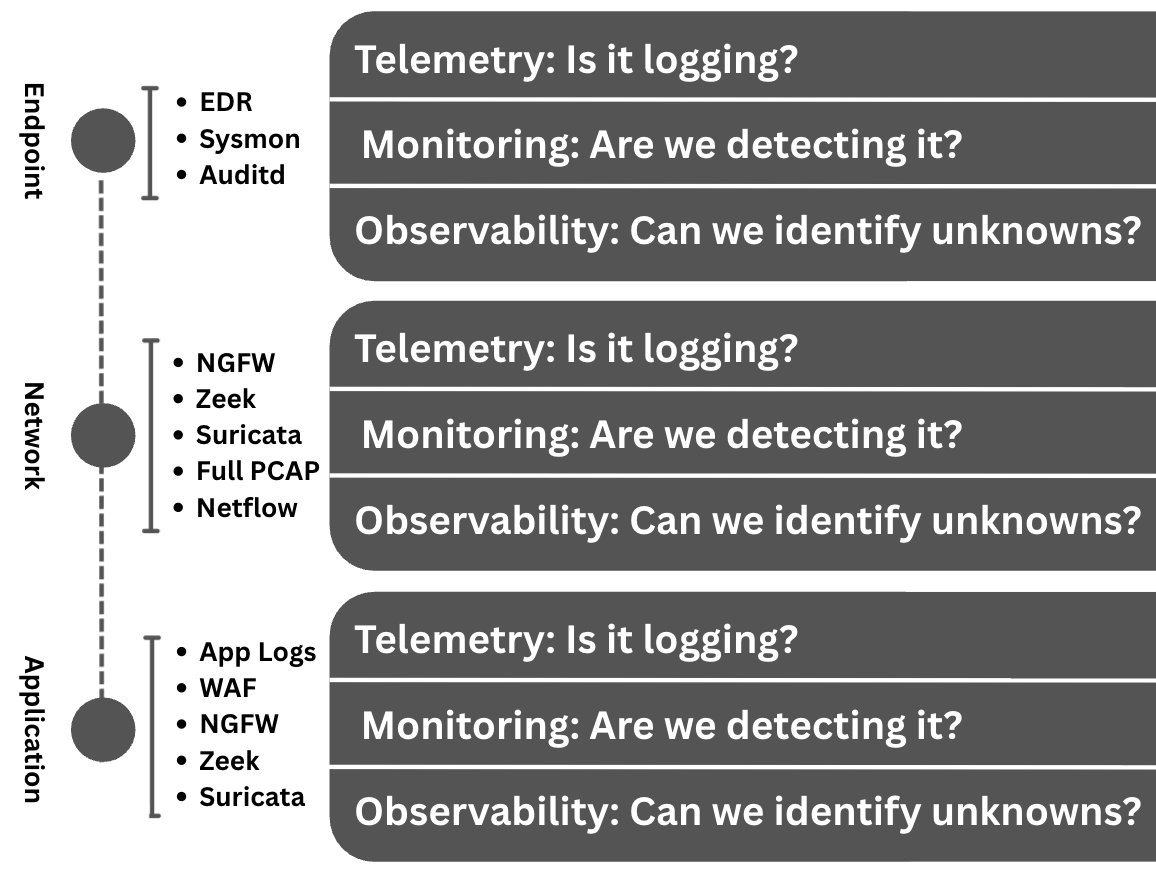
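As a rough illustration of the strategic view, the sketch below maps each Cyber Kill Chain stage to a plain-language status an executive could read. The `summarize` function, the status wording, and the sample `coverage` values are hypothetical; only the seven stage names come from the Lockheed Martin model.

```python
from __future__ import annotations

# The seven stages of the Lockheed Martin Cyber Kill Chain.
KILL_CHAIN = [
    "Reconnaissance",
    "Weaponization",
    "Delivery",
    "Exploitation",
    "Installation",
    "Command and Control",
    "Actions on Objectives",
]


def summarize(pillars: set[str]) -> str:
    """Translate the pillars in place for a stage into an executive-level status."""
    if "telemetry" not in pillars:
        return "blind"
    can_detect = "monitoring" in pillars
    can_investigate = "observability" in pillars
    if can_detect and can_investigate:
        return "can detect and investigate"
    if can_detect:
        return "can detect, limited investigation"
    if can_investigate:
        return "retroactive investigation only"
    return "data collected but not yet used"


# Hypothetical coverage for an imaginary organization; stages not listed
# are treated as having no pillars in place.
coverage = {
    "Delivery": {"telemetry", "monitoring", "observability"},
    "Exploitation": {"telemetry", "observability"},
    "Command and Control": {"telemetry"},
}

report = {stage: summarize(coverage.get(stage, set())) for stage in KILL_CHAIN}
for stage, status in report.items():
    print(f"{stage:<22} {status}")
```

The point of the wording is to keep "we can look at it afterwards" from being reported upward as "we have coverage."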

Operational Visibility

This is the view for managers and team leads. It acts as a bridge, breaking down the high-level strategy into broad technical domains. Operational visibility groups coverage by areas like Endpoint, Network, and Cloud to answer the question: "Do we have the right capabilities across our core technology pillars?"

For each domain, the questions remain the same:

- Endpoint: Are we collecting the right telemetry, actively monitoring it, and do we have the observability to investigate unknowns?

- Network: Do we have the telemetry, monitoring, and observability needed to cover network traffic?

- Application: Do we have the telemetry, monitoring, and observability needed to cover our critical applications?

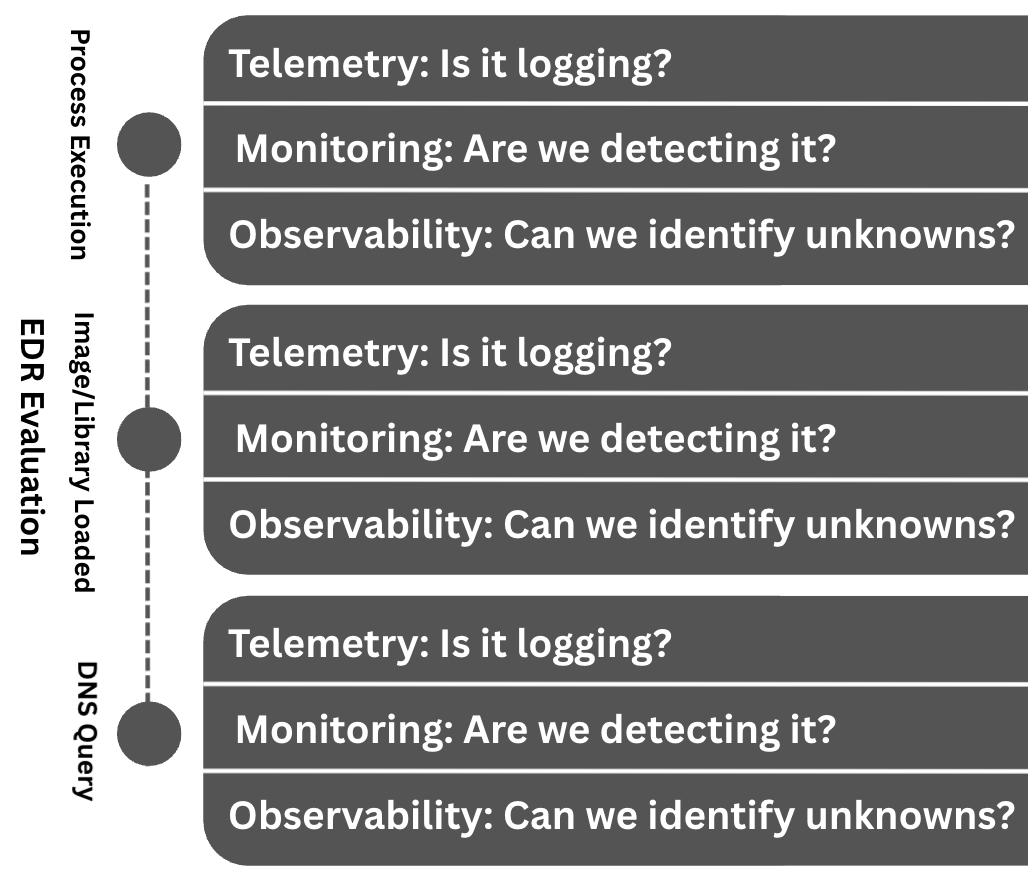
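One way to picture the operational view is a per-domain gap list. This is only a sketch: the `domain_gaps` helper and the sample coverage values are made up for illustration, and the domains simply mirror the questions above.

```python
from __future__ import annotations

PILLARS = ("telemetry", "monitoring", "observability")


def domain_gaps(coverage: dict[str, set[str]]) -> dict[str, list[str]]:
    """Return, for each domain, the pillars that are not yet in place."""
    return {
        domain: [pillar for pillar in PILLARS if pillar not in in_place]
        for domain, in_place in coverage.items()
    }


# Hypothetical state of an imaginary environment.
coverage = {
    "Endpoint": {"telemetry", "monitoring", "observability"},
    "Network": {"telemetry", "observability"},
    "Application": set(),
}

gaps = domain_gaps(coverage)
for domain, missing in gaps.items():
    status = "covered" if not missing else "missing " + ", ".join(missing)
    print(f"{domain}: {status}")
```

A team lead could use output like this to decide where to invest next, for example writing network detections before onboarding yet another endpoint data source.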

Tactical Visibility

This is the ground truth for practitioners. It's where you evaluate specific tools and data sources to answer the most granular question: "Does this EDR, log source, or tool give us the exact artifacts we need to do our jobs?"

For example, when evaluating an EDR, you're not just asking if it collects "telemetry." You're asking if it collects the specific forensic artifacts needed for monitoring (detection rules) and observability (threat hunting and incident response). This is where the framework becomes a checklist.
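Here is a minimal sketch of what that checklist could look like in Python. The artifact names (`process_creation`, `dns_query`, and so on) and the `missing_artifacts` helper are hypothetical placeholders; a real evaluation would use whatever artifacts your detection rules and investigations actually depend on.

```python
from __future__ import annotations


def missing_artifacts(
    collected: set[str], required: dict[str, set[str]]
) -> dict[str, list[str]]:
    """For each purpose, list the required artifacts the tool does not collect."""
    return {
        purpose: sorted(needed - collected)
        for purpose, needed in required.items()
    }


# Hypothetical requirements, split by what each pillar needs from the tool.
required = {
    "monitoring": {"process_creation", "network_connection"},
    "observability": {"process_creation", "dns_query", "file_write"},
}

# Hypothetical list of what the EDR under evaluation actually collects.
edr_collects = {"process_creation", "network_connection", "file_write"}

result = missing_artifacts(edr_collects, required)
print(result)  # prints: {'monitoring': [], 'observability': ['dns_query']}
```

In this made-up case the tool supports every detection rule but leaves a hole for threat hunting, which is the kind of distinction a single "collects telemetry" checkbox would hide.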

A mental model and the start of a framework, not a silver bullet

It’s crucial to remember that "full visibility" doesn't mean you'll detect every attack. There will always be blind spots. For instance, even the best EDR can struggle against malware that abuses low-level Windows APIs. You could have perfect visibility by this model and still miss a sophisticated adversary. There's also nuance this model doesn't fully capture, such as log source health and whether a source is still checking in (maybe covered by observability?), or the underlying method by which an artifact is collected on an endpoint.

You shouldn't evaluate a security tool on visibility alone. This framework is first and foremost a communication tool.

The goal is to give a detection engineer and an incident handler a shared language. If we can use this framework to get on the same page about what visibility actually means, we can build better defenses.

I'd love to hear your constructive feedback on this!
